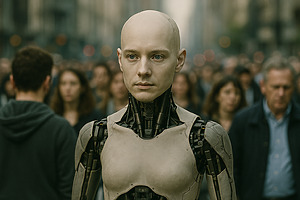

With astonishing advances in artificial intelligence, robotics, and computer science, the once-distant idea of human-like machines capable of thinking and deciding, known as artificial humanoids, no longer belongs to science fiction. The arrival of artificial general intelligence and superintelligent systems may be a few decades away.

As these intelligent entities become increasingly autonomous and lifelike, we face profound questions: What is the moral, legal, and socio-political status of human-like AI systems, especially artificial humanoids? What impact might artificial humanoids have on human society from moral, social, and political perspectives?

Researchers are working to address these and related questions. Among them is Prof. Dr John-Stewart Gordon, who recently joined the KTU FSSAH community as a chief researcher. He is currently on a research stay at the Institute for Ethics in AI at the University of Oxford (May 15–August 31), where he is working on a new project titled “The Moral and Socio-Political Status of Artificial Humanoids”.

Might artificial humanoids live among us?

Superintelligence, a term for artificial intelligence systems that can think, learn, and make decisions significantly better than the brightest human minds, is expected to emerge at some point in the future.

Using an inclusive approach, the project will attempt to determine which ethical frameworks and practical measures are needed to ensure a peaceful and just coexistence between humans and artificial humanoids.

The relationship between artificial humanoids and human beings

Some scholars have voiced serious concerns about the potential dangers of superintelligent machines. If humanity fails to solve the so-called control and alignment problems, superintelligent AI could pose an existential threat. How can society govern or contain entities that vastly outperform humans in every cognitive domain? So far, there is no clear answer.

According to Prof. Dr J. S. Gordon, a possible solution lies in aligning the values and goals of these advanced systems with human moral norms, ensuring that their actions, no matter how autonomous, remain compatible with human well-being. Yet whether such alignment is truly achievable remains one of the greatest unanswered questions of our time.

Prof. Dr J. S. Gordon has proposed updating human rights law to include a Universal Convention for the Rights of AI Systems, which would give legal recognition to advanced AI. He has also proposed developing a fair moral and socio-political approach that takes the moral interests of artificial humanoids into account. This approach would address the new social and political challenges that could arise if superintelligent machines, especially humanoid AI, become a reality.

According to the professor, the project is not merely an academic exercise but a crucial endeavour to prepare society for the challenges and opportunities of an AI-driven future: it aims to help policymakers, technologists, and the general public make informed decisions about integrating AI into society.